pre() and post() 62ref Property 78populate() Syntax 80explain() 115Mongoose is an object-document mapping (ODM) framework for Node.js and MongoDB. It is the most popular database framework for Node.js in terms of npm downloads (over 800,000 per month) and usage on GitHub (over 939,000 repos depend on Mongoose).

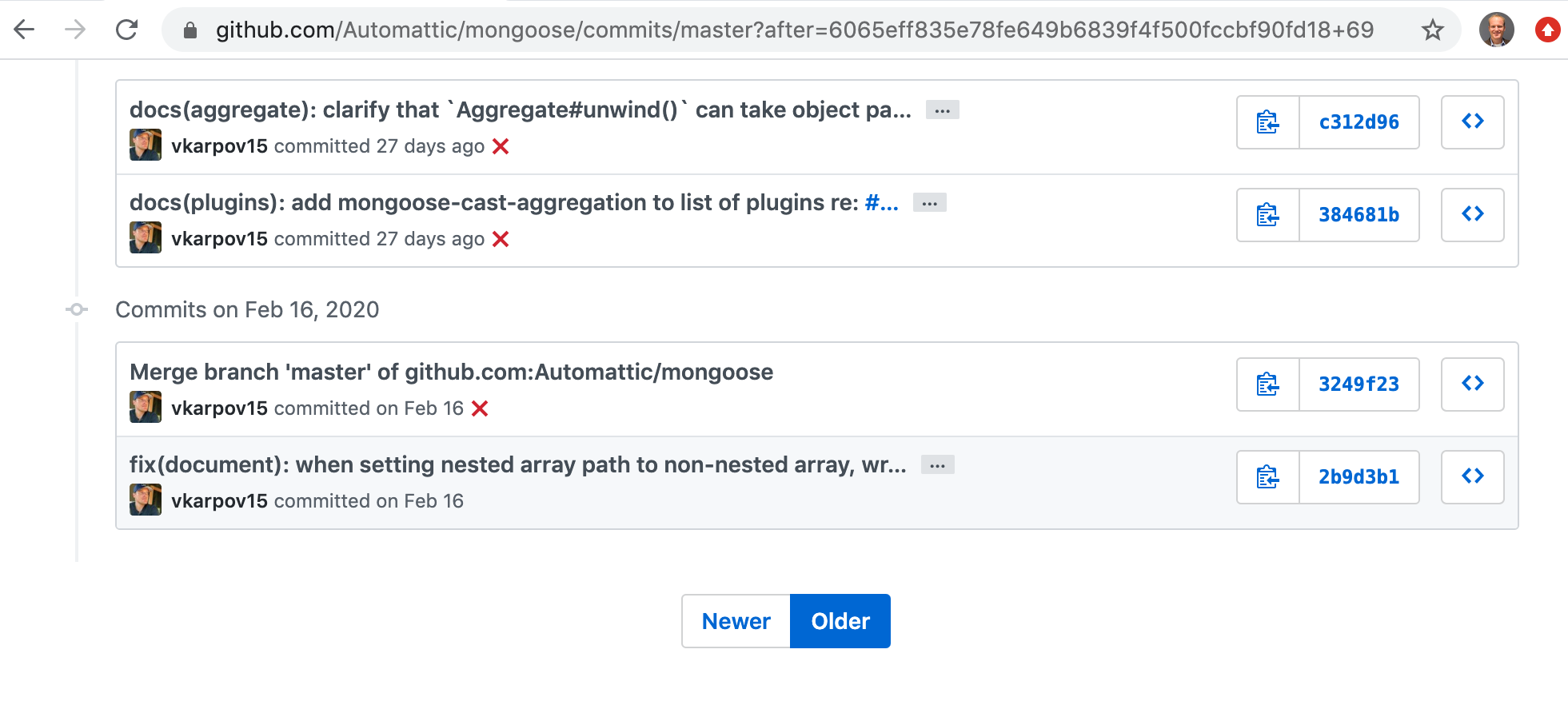

I'm Valeri Karpov, the maintainer of Mongoose since April 2014. Mongoose was one of the first Node.js projects when it was written in 2010, and by 2014 the early maintainers had moved on. I had been using Mongoose since 2012, so I jumped on the opportunity to work on the project as fast as I could. I've been primarily responsible for Mongoose for most of its 10 years of existence.

This eBook contains the distilled experience of 8 years working with MongoDB and Mongoose, and 6 years working on Mongoose. The goal of this book is to take you from someone who is familiar with Node.js and MongoDB, to someone who can architect a Mongoose-based backend for a large scale business. Here are the guiding principles for this eBook:

Before we get started, here's some technical details.

# symbol as a convenient shorthand for .prototype.. For example, Document#save() refers to the save() method on instances of the Document class.Mongoose is a powerful tool for building backend applications. Mongoose provides change tracking and schema validation out of the box, letting you work with a schema-less database without worrying about malformed data. Mongoose also provides tools for customizing your database interactions, like middleware and plugins. Mongoose's community also supports a wide variety of plugins for everything from pagination to encryption to custom types.

Are you ready to become a Mongoose master? Let's get started!

Mongoose is a object-document mapping (ODM) framework for Node.js and MongoDB. This definition is nuanced, so here's an overview of the core benefits of using Mongoose.

Mongoose is built on top of the official MongoDB Node.js Driver, which this book will refer to as just "the driver". The driver is responsible for maintaining a connection to MongoDB and serializing commands into MongoDB's wire protocol. The driver is an rapidly growing project and it is entirely possible to build an application without Mongoose. The major benefits of using Mongoose over using the driver directly are:

save(), you can put a single log statement in pre('save').In order to clarify what Mongoose is, let's take a look at how Mongoose differs from other modules you may have used.

Mongoose is an ODM, not an ORM (object-relational mapping). Some common ORMs are ActiveRecord, Hibernate, and Sequelize. Here's the key difference between ORMs and ODMs: ORMs store data in relational databases, so data from one ORM object may end up in multiple rows in multiple tables. In other words, how your data looks in JavaScript may be completely different from how your ORM stores the data.

MongoDB allows storing arbitrary objects, so Mongoose doesn't need to slice your data into different collections. Like an ORM, Mongoose validates your data, provides a middleware framework, and transforms vanilla JavaScript operations into database operations. But unlike an ORM, Mongoose ensures your objects have the same structure in Node.js as they do when they're stored in MongoDB.

Mongoose is more a framework than a library, although it has elements of both. For the purposes of this book, a framework is a module that executes your code for you, whereas a library is a module that your code calls. Modules that are either a pure framework or a pure library are rare. But, for example, Lodash is a library, Mocha is a framework, and Mongoose is a bit of both.

A database driver, like the MongoDB Node.js driver, is a library. It exposes several functions that you can call, but it doesn't provide middleware or any other paradigm for structuring code that uses the library. On the other hand, Mongoose provides middleware, custom validators, custom types, and other paradigms for code organization.

The MongoDB driver doesn't prescribe any specific architecture. You can build your project using traditional MVC architecture, aspect-oriented, reactive extensions, or anything else. Mongoose, on the other hand, is designed to fit the "model" portion of MVC or MVVM.

Mongoose is an ODM for Node.js and MongoDB. It provides schema validation, change tracking, middleware, and plugins. Mongoose also makes it easy to build apps using MVC patterns. Next, let's take a look at some basic Mongoose patterns that almost all Mongoose apps share.

To use Mongoose, you need to open at least one connection to a MongoDB server, replica set, or sharded cluster.

This book will assume you already have a MongoDB instance running. If you don't have MongoDB set up yet, the easiest way to get started is a cloud instance using MongoDB Atlas' free tier. If you prefer a local MongoDB instance, run-rs is an npm module that automatically installs and runs MongoDB for you.

Here's how you connect Mongoose to a MongoDB instance running on your local machine on the default port.

const mongoose = require('mongoose');

mongoose.connect('mongodb://localhost:27017/mydb');

The first parameter to mongoose.connect() is known as the connection string.

The connection string defines which MongoDB server(s) you're connecting to and

the name of the database you want to use. More sophisticated connection strings

can also include authentication information and configuration options.

The mydb section of the connection string tells Mongoose which database to

use. MongoDB stores data in the form of documents. A document is essentially

a Node.js object, and analagous to a row in SQL databases. Every document

belongs to a collection, and every collection belongs to a database. For the

purposes of this book, you can think of a database as a set of collections.

Although connecting to MongoDB is an asynchronous operation, you don't have to

wait for connecting to succeed before using Mongoose. You can, and generally

should, await on mongoose.connect() to ensure Mongoose connects successfully.

await mongoose.connect('mongodb://localhost:27017/mydb');

However, many Mongoose apps do not wait for Mongoose to connect because it

isn't strictly necessary. Don't be surprised if you see mongoose.connect()

with no await or then().

To store and query MongoDB documents using Mongoose, you need to define a model. Mongoose models are the primary tool you'll use for creating and loading documents. To define a model, you first need to define a schema.

In general, Mongoose schemas are objects that configure models. In particular, schemas are responsible for defining what properties your documents can have and what types those properties must be.

For example, suppose you're creating a model to represent a product. A minimal

product should have a string property name and a number property price as

shown below.

const productSchema = new mongoose.Schema({

// A product has two properties: `name` and `price`

name: String,

price: Number

});

// The `mongoose.model()` function has 2 required parameters:

// The 1st param is the model's name, a string

// The 2nd param is the schema

const Product = mongoose.model('Product', productSchema);

const product = new Product({

name: 'iPhone',

price: '800', // Note that this is a string, not a number

notInSchema: 'foo'

});

product.name; // 'iPhone'

product.price; // 800, Mongoose converted this to a number

// undefined, Mongoose removes props that aren't in the schema

product.notInSchema;

The mongoose.model() function takes the model's name and schema as parameters,

and returns a class. That class is configured to cast, validate, and track

changes on the paths defined in the schema. Schemas have numerous features

beyond just type checking. For example, you can make Mongoose lowercase the

product's name as shown below.

const productSchema = new mongoose.Schema({

// The below means the `name` property should be a string

// with an option `lowercase` set to `true`.

name: { type: String, lowercase: true },

price: Number

});

const Product = mongoose.model('Product', productSchema);

const product = new Product({ name: 'iPhone', price: 800 });

product.name; // 'iphone', lowercased

Internally, when you instantiate

a Mongoose model, Mongoose defines

native JavaScript getters and setters

for every path in your schema on every instance of that model. Here's an

example of a simplified Product class implemented using ES6 classes

that mimics how a Mongoose model works.

class Product {

constructor(obj) {

// `_doc` stores the raw data, bypassing getters and setters

// Otherwise `doc.name = 'foo'` causes infinite recursion

this._doc = {};

Object.assign(this, { name: obj.name, price: obj.price });

}

get name() { return this._doc.name; }

set name(v) { this._doc.name = v == null ? v : '' + v; }

get price() { return this._doc.price; }

set price(v) { this._doc.price = v == null ? v : +v; }

}

const p = new Product({ name: 'iPhone', price: '800', foo: 'bar' });

p.name; // 'iPhone'

p.price; // 800

p.foo; // undefined

In Mongoose, a model is a class, and an instance of a model is called a document. This book also defined a "document" as an object stored in a MongoDB collection in section 1.2. These two definitions are equivalent for practical purposes, because a Mongoose document in Node.js maps one-to-one to a document stored on the MongoDB server.

There are two ways to create a Mongoose document: you can instantiate a model to create a new document, or you can execute a query to load an existing document from the MongoDB server.

To create a new document, you can use your model as a constructor. This creates

a new document that has not been stored in MongoDB yet. Documents

have a save() function that you use to persist changes to MongoDB. When you

create a new document, the save() function sends an insertOne() operation

to the MongoDB server.

// `doc` is just in Node.js, you haven't persisted it to MongoDB yet

const doc = new Product({ name: 'iPhone', price: 800 });

// Store `doc` in MongoDB

await doc.save();

That's how you create a new document. To load an existing document from MongoDB,

you can use the Model.find() or Model.findOne() functions. There are several

other query functions you can use that you'll learn about in Chapter 3.

// `Product.findOne()` returns a Mongoose _query_. Queries are

// thenable, which means you can `await` on them.

let product = await Product.findOne();

product.name; // "iPhone"

product.price; // 800

const products = await Product.find();

product = products[0];

product.name; // "iPhone"

product.price; // 800

Connecting to MongoDB, defining a schema, creating a model, creating documents, and loading documents are the basic patterns that you'll see in almost every Mongoose app. Now that you've seen the verbs describing what actions you can do with Mongoose, let's define the nouns that are responsible for these actions.

So far, you've seen the basic patterns of working with Mongoose: connecting to MongoDB, defining a schema, creating a model, creating a document, and storing a document. In order to do this, Mongoose has 5 core concepts. Let's take a look at these 5 concepts and how they interact.

Mongoose has 5 core concepts that every other concept in the framework builds on. The 5 concepts are:

save() a document to MongoDB.Here's an example of the 5 core concepts in action:

const mongoose = require('mongoose');

// Here's how you create a connection:

const conn = mongoose.createConnection();

conn instanceof mongoose.Connection; // true

// Here's how you create a schema

const schema = new mongoose.Schema({ name: String });

// Calling `conn.model()` creates a new model. In this book

// a "model" is a class that extends from `mongoose.Model`

const MyModel = conn.model('ModelName', schema);

Object.getPrototypeOf(MyModel) === mongoose.Model; // true

// You shouldn't instantiate the `Document` class directly.

// You should use a model instead.

const document = new MyModel();

document instanceof MyModel; // true

document instanceof mongoose.Document; // true

const query = MyModel.find();

query instanceof mongoose.Query; // true

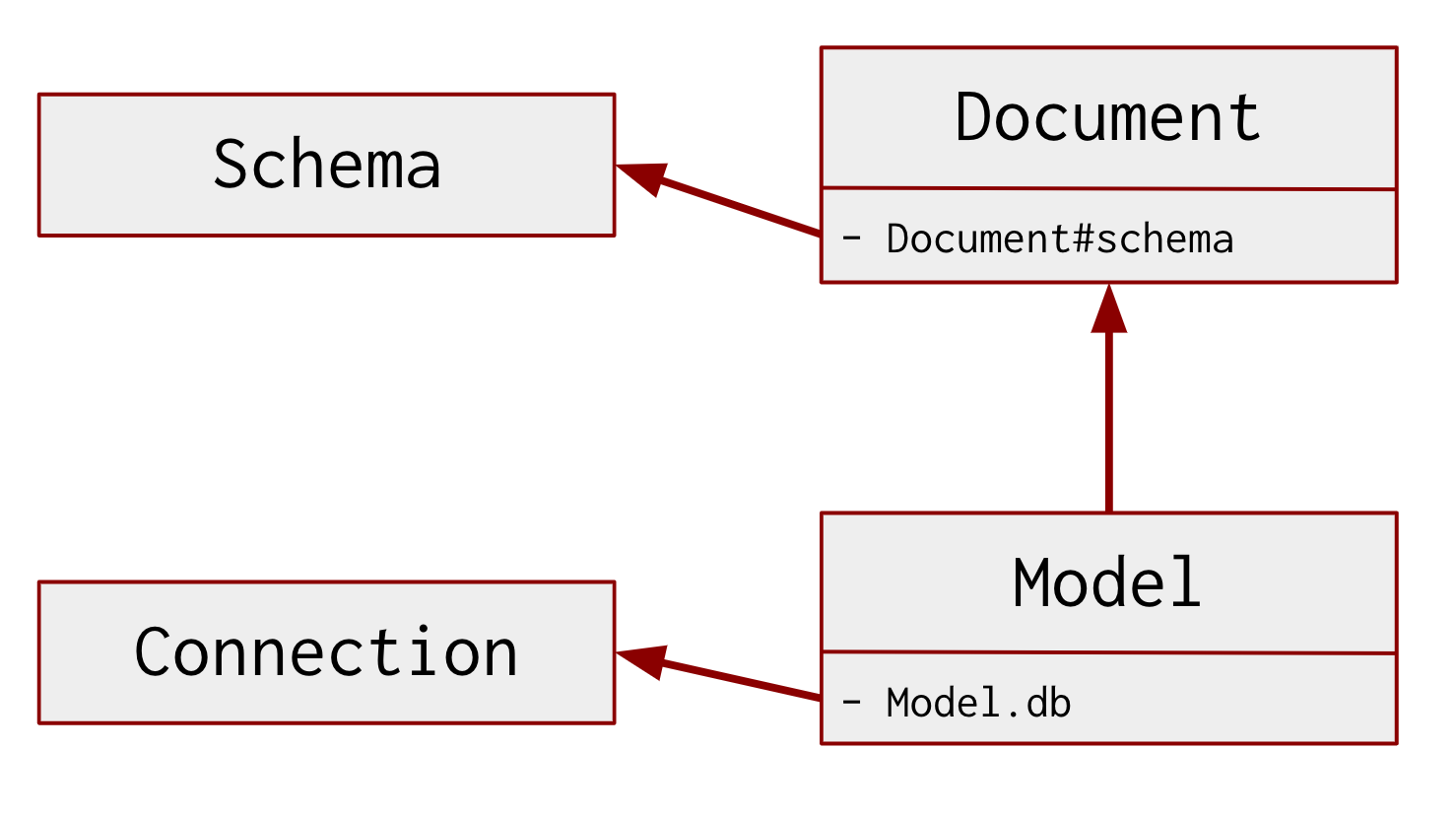

Models are the primary concept you will use to interact with

MongoDB using Mongoose. In order to create a model you

need a connection and a schema. A schema configures your model,

and a connection provides your model a low-level database

interface. Below is a class diagram representing how the

Document, Model, Connection, and Schema classes are

related.

Under the hood, Mongoose's Model class inherits from the

Document class. When you create a new model using mongoose.model(),

you create a new class that inherits from Model. However, for

convenience, Mongoose developers typically use the term "model" to refer to

classes that inherit from Model, and the term "document"

to refer to instances of models.

There's two ways to create a model in Mongoose. You can call the

top-level mongoose.model() function, which takes 2 parameters: a model name

and a schema.

const mongoose = require('mongoose');

mongoose.model('MyModel', new mongoose.Schema({}));

You can also create a new connection and call the connection's

model() function:

const conn = mongoose.createConnection();

conn.model('MyModel', new mongoose.Schema({}));

Remember that a model needs both a connection and a schema.

Mongoose has a default connection mongoose.connection. That is

the connection mongoose.model() uses.

// `mongoose.model()` uses the default connection

mongoose.model('MyModel', schema);

// So the below function call does the same thing

mongoose.connection.model('MyModel', schema);

Note that the return value of mongoose.model() is not an instance of the

Model class. Rather, mongoose.model() returns a class that extends from

Model.

const MyModel = mongoose.model('MyModel', new mongoose.Schema({}));

// `MyModel` is a class that extends from `mongoose.Model`, _not_

// an instance of `mongoose.Model`.

MyModel instanceof mongoose.Model; // false

Object.getPrototypeOf(MyModel) === mongoose.Model; // true

Because Document and Model are distinct classes, some functions are

documented under the Document class and some under the Model class. For

example, save() is defined on the Model class, not the

Document class. Keep this in mind when reading Mongoose documentation.

Mongoose documents are responsible for casting, validating, and tracking changes on the paths defined in your schema.

Casting means converting values to the types defined in your schema. Mongoose

converts arbitrary values to the correct type, or tracks a CastError if it

failed to cast the specified value. Calling save() will fail if there was a

CastError.

const schema = Schema({ name: String, age: Number });

const MyModel = mongoose.model('MyModel', schema);

const doc = new MyModel({});

doc.age = 'not a number';

// Throws CastError: Cast to Number failed for value

// "not a number" at path "age"

await doc.save();

If casting fails, Mongoose will not overwrite the current value of the path.

In the below example, doc.age will still be 59 after trying to assign

'not a number'.

const schema = Schema({ name: String, age: Number });

const Person = mongoose.model('Person', schema);

const doc = new Person({ name: 'Jean-Luc Picard', age: 59 });

doc.age = 'not a number';

doc.age; // 59

You can clear the cast error by setting age to a value Mongoose can cast to

a number. In the below example, Mongoose will successfully save the document,

because setting age to a number clears the cast error.

const doc = new Person({ name: 'Jean-Luc Picard', age: 59 });

doc.age = 'not a number';

doc.age; // 59

doc.age = '12';

doc.age; // 12 as a number

// Saving succeeds, because Mongoose successfully converted the

// string '12' to a number and cleared the previous CastError

await doc.save();

By default, Mongoose casting considers null and undefined

(collectively known as nullish values) as valid values for any type.

For example, you can set name and age to nullish values and save the

document.

doc.age = null;

doc.name = undefined;

// Succeeds, because Mongoose casting lets `null` and `undefined`.

// `name` and `age` will _both_ be `null` in the database.

await doc.save();

Note: Even though name is set to undefined, Mongoose will store it as

null in the database. MongoDB does support storing undefined, but the

MongoDB Node.js driver does not support undefined because the undefined

type has been deprecated for a decade, see

issue mongodb/js-bson#134 on GitHub.

If you want to disallow null values for a path, you need to use validation.

Validation is a separate concept, although closely related to casting. Casting converts a value to the correct type. After Mongoose casts the value successfully, validation lets you further restrict what values are valid. However, validation can't transform the value being validated, whereas casting may change the value.

When you call save(), Mongoose runs validation on

successfully casted values, and reports a ValidatorError if validation fails.

const schema = new mongoose.Schema({

name: {

type: String,

// `enum` adds a validator to `name`. The built-in `enum`

// validator ensures `name` will be one of the below values.

enum: ['Jean-Luc Picard', 'William Riker', 'Deanna Troi']

}

});

const Person = mongoose.model('Person', schema);

const doc = new Person({ name: 'Kathryn Janeway' });

await doc.save().catch(error => {

// ValidationError: Person validation failed: name:

// `Kathryn Janeway` is not a valid enum value for path `name`.

error;

// ValidatorError: `Kathryn Janeway` is not a valid enum

// value for path `name`.

error.errors['name'];

Object.keys(error.errors); // ['name']

});

If validate() fails, Mongoose rejects the promise with a ValidationError.

The ValidationError has an errors property that contains a hash mapping

errored paths to the corresponding ValidatorError or CastError. In other

words, a ValidationError can contain multiple ValidatorError objects and/or

CastError objects.

Mongoose documents have a validate() function that you can use to manually

run validation. The save() function calls validate() internally, so as a

Mongoose user you are not responsible for running validation yourself. However,

you may run validation on your own using validate(). Just remember:

the validate() function is asynchronous, so you need to await on it.

Mongoose has numerous built-in validators, but built-in validators only apply

to certain types. For example, adding enum to a path of type Number is a

no-op.

const schema = new mongoose.Schema({

age: {

type: Number,

enum: [59, 60, 61]

}

});

const Person = mongoose.model('Person', schema);

const doc = new Person({ age: 22 });

// No error, `enum` does nothing for `type: Number`

await doc.validate();

Here's a list of all the built-in validators Mongoose supports for different types:

enum, match, minlength, maxlengthmin, maxmin, maxThere is one built-in validator that applies to all schema types: the required

validator. The required validator disallows nullish values.

const schema = new mongoose.Schema({

name: {

type: String,

// Adds the `required` validator to the `name` path

required: true

}

});

const Person = mongoose.model('Person', schema);

const doc = new Person({ name: null });

await doc.validate().catch(error => {

// ValidationError: Person validation failed: name: Path

// `name` is required.

error;

// ValidatorError: Path `name` is required.

error.errors['name'];

});

Mongoose documents have change tracking. That means Mongoose tracks vanilla

JavaScript assignments, like doc.name = 'foo' or

Object.assign(doc, { name: 'foo' }), and converts them into MongoDB update

operators. When you call save(), Mongoose sends your changes to MongoDB.

const MyModel = mongoose.model('MyModel', mongoose.Schema({

name: String,

age: Number

}));

const doc = new MyModel({});

doc.name = 'Jean Valjean';

doc.age = 27;

await doc.save(); // Persist `doc` to MongoDB

When you load a document from the database using a query, change tracking means Mongoose can determine the minimal update to send to MongoDB and avoid wasting network bandwidth.

// Mongoose loads the document from MongoDB and then _hydrates_ it

// into a full Mongoose document.

const doc = await MyModel.findOne();

doc.name; // "Jean Valjean"

doc.name = 'Monsieur Leblanc';

doc.modifiedPaths(); // ['name']

// `save()` only sends updated paths to MongoDB. Mongoose doesn't

// send `age`.

await doc.save();

A Mongoose document is an instance of a Mongoose model. Documents track changes,

so you can modify documents using vanilla JavaScript operations and rely on

Mongoose to send a minimal update to MongoDB when you save(). Mongoose does

more with change tracking than just structuring MongoDB updates: it also

makes sure your JavaScript assignments conform to a schema. Let's take a closer

look at schemas.

In Mongoose, a schema is a configuration object for a model. A schema's primary responsibility is to define the paths that the model will cast, validate, and track changes on.

Let's take a closer look at the schema from example 2.2.1. This schema has

two explicitly defined paths: name and age. Mongoose also adds an _id

path to schemas by default. You can use the Schema#path() function to get

the SchemaType instance associated with a given path as shown below.

const schema = Schema({ name: String, age: Number });

Object.keys(schema.paths); // ['name', 'age', '_id']

schema.path('name'); // SchemaString { path: 'name', ... }

schema.path('name') instanceof mongoose.SchemaType; // true

schema.path('age'); // SchemaNumber { path: 'age', ... }

schema.path('age') instanceof mongoose.SchemaType; // true

// The `SchemaString` class inherits from `SchemaType`.

schema.path('name') instanceof mongoose.Schema.Types.String; // true

A Mongoose SchemaType, like the SchemaString class, does not contain

an actual value. There is no string value associated with an instance of

Schema.Types.String. A SchemaType instance is a configuration object

for an individual path: it describes what casting, validation, and other

Mongoose features should apply to a given path.

Many Mongoose developers use the terms "schema" and "model" interchangeably. Don't do this! A schema configures a model, but a schema instance can't write to the database. Similarly, a document is not an instance of a schema. Documents are instances of models, and models have an associated schema. In object-oriented programming terminology, a model has a schema, and a model is a document.

So far, the only SchemaType features you've seen are casting and validation. In the next section, you'll learn about another SchemaType feature: getters and setters.

In Mongoose, getters/setters allow you to execute custom logic when getting or setting a property on a document. Getters are useful for converting data that's stored in MongoDB into a more human-friendly form, and setters are useful for converting user data into a standardized format for storage in MongoDB. First, let's take a look at getters.

Mongoose executes your custom getter when you access a property. In the below

example, the string path email has a custom getter that replaces '@' with

' [at] '.

const schema = new mongoose.Schema({ email: String });

// `v` is the underlying value stored in the database

schema.path('email').get(v => v.replace('@', ' [at] '));

const Model = mongoose.model('User', schema);

const doc = new Model({ email: 'test@gmail.com' });

doc.email; // 'test [at] gmail.com'

doc.get('email'); // 'test [at] gmail.com'

Keep in mind that getters do not impact the underlying data stored in

MongoDB. If you save doc, the email property will be 'test@gmail.com' in

the database. Mongoose applies the getter every time you access the property.

In the above example, Mongoose runs the getter function twice, once for

doc.email and once for doc.get('email').

Another useful feature of getters is that Mongoose applies getters when

converting a document to JSON, including when you call

Express' res.json() function

with a Mongoose document. This makes getters an excellent tool for formatting

MongoDB data in RESTful APIs.

app.get(function(req, res) {

return Model.findOne().

// The `email` getter will run here

then(doc => res.json(doc)).

catch(err => res.status(500).json({ message: err.message }));

});

To disable running getters when converting a document to JSON, set the

toJSON.getters option to false in your schema

as shown below.

const userSchema = new Schema({

email: { type: String, get: obfuscate }

}, { toJSON: { getters: false } });

You can also skip running getters on a one-off basis using the

Document#get() function's getters option.

// Make sure you don't forget to pass `null` as the 2nd parameter.

doc.get('email', null, { getters: false }); // 'test@gmail.com'

Mongoose executes custom setters when you assign a value to a property. Suppose

you want to ensure all user emails are lowercased, so users don't have to worry

about case when logging in. The below userSchema adds a custom setter that

lowercases emails.

const userSchema = Schema({ email: String });

userSchema.path('email').set(v => v.toLowerCase());

const User = mongoose.model('User', userSchema);

const user = new User({ email: 'TEST@gmail.com' });

user.email; // 'test@gmail.com'

Mongoose will call the custom setter regardless of which syntax you use to set

the email property.

doc.email = 'TEST@gmail.com'doc.set('email', 'TEST@gmail.com')Object.assign(doc, { email: 'TEST@gmail.com' })Mongoose also runs setters on update operations:

updateOne()updateMany()findOneAndUpdate()findOneAndReplace()For example, Mongoose will run the toLowerCase() setter in the below example,

because the below example calls updateOne().

const { _id } = await User.create({ email: 'test@gmail.com' });

// Mongoose will run the `toLowerCase()` setter on `email`

await User.updateOne({ _id }, { email: 'NEW@gmail.com' });

const doc = await User.findOne({ _id });

doc.email; // 'new@gmail.com'

Getters and setters are good for more than just manipulating strings. You can

also use getters and setters to transform values to and from different types.

For example, MongoDB ObjectIds are objects that are commonly represented as

strings that look like '5d124083fc741d44eca250fd'. However, you cannot compare

ObjectIds using JavaScript's === operator, or even the == operator.

const str = '5d124083fc741d44eca250fd';

const schema = Schema({ objectid: mongoose.ObjectId });

const Model = mongoose.model('ObjectIdTest', schema);

const doc1 = new Model({ objectid: str });

const doc2 = new Model({ objectid: str });

// Mongoose casted the string `str` to an ObjectId

typeof doc1.objectid; // 'object'

doc1.objectid instanceof mongoose.Types.ObjectId; // true

doc1.objectid === doc2.objectid; // false

doc1.objectid == doc2.objectid; // false

However, you can use a custom getter that converts the objectid property

into a string for comparison purposes. This approach also works for deep

equality checks like assert.deepEqual().

const str = '5d124083fc741d44eca250fd';

const s = Schema({ objectid: mongoose.ObjectId }, { _id: false });

// Add a custom getter that converts ObjectId values to strings

s.path('objectid').get(v => v.toString());

const Model = mongoose.model('ObjectIdTest', s);

const doc1 = new Model({ objectid: str });

const doc2 = new Model({ objectid: str });

// Mongoose now converts `objectid` to a string for you

typeof doc1.objectid; // 'string'

// The raw value stored in MongoDB is still an ObjectId

typeof doc1.get('objectid', null, { getters: false }); // 'object'

doc1.objectid === doc2.objectid; // true

doc1.objectid == doc2.objectid; // true

assert.deepEqual(doc1.toObject(), doc2.toObject()); // passes

Mongoose casting converts strings to ObjectIds for you, so in the above example you don't need to write a custom setter. However, if you use a getter to convert the raw value to a different type, you shoud also write a setter that converts back to the correct type.

For example, MongoDB introduced support for decimal floating points in MongoDB

3.4. Mongoose has a Decimal128 type for storing values as decimal floating

points. Below is an example of using Decimal128 with Mongoose.

const accountSchema = Schema({ balance: mongoose.Decimal128 });

const Account = mongoose.model('Account', accountSchema);

await Account.create({ balance: 0.1 });

await Account.updateOne({}, { $inc: { balance: 0.2 } });

const account = await Account.findOne();

account.balance.toString(); // 0.3

// The MongoDB Node driver currently doesn't support

// addition and subtraction with Decimals

account.balance + 0.5;

Currently, you can't use add or subtract native JavaScript numbers with MongoDB decimals. Luckily, you can use getters and setters to make MongoDB decimals look like numbers as far as JavaScript is concerned.

const accountSchema = Schema({

balance: {

type: mongoose.Decimal128,

// Convert the raw decimal value to a native JS number

// when accessing the `balance` property

get: v => parseFloat(v.toString()),

// When setting the `balance` property, round to 2

// decimal places and convert to a MongoDB decimal

set: v => mongoose.Types.Decimal128.fromString(v.toFixed(2))

}

});

const Account = mongoose.model('Account', accountSchema);

const account = new Account({ balance: 0.1 });

account.balance += 0.2;

account.balance; // 0.3

Many codebases use setters to handle computed properties, properties that are computed from other properties in the document. For example, suppose you want to store a user's email domain (for example, "gmail.com") in addition to the user's email.

const userSchema = Schema({ email: String, domain: String });

// Whenever you set `email`, make sure you update `domain`.

userSchema.path('email').set(function(v) {

// In a setter function, `this` may refer to a document or a query.

// If `this` is a document, you can modify other properties.

this.domain = v.slice(v.indexOf('@') + 1);

return v;

});

const User = mongoose.model('User', userSchema);

const user = new User({ email: 'test@gmail.com' });

user.email; // 'test@gmail.com'

user.domain; // 'gmail.com'

Using setters to modify other properties within the document is viable, but you should avoid doing so unless there's no other option. We include it in this book because using setters for computed properties is a common pattern in practice and you may see it when working on an existing codebase. However, we recommend using virtuals or middleware instead.

What can go wrong with computed property setters? First, in userSchema,

the domain property is a normal property that you can set. A malicious

user or a bug could cause an incorrect domain.

// Since `domain` is a real property, you can `set()` it and

// overwrite the computed value.

let user = new User({ email: 'test@gmail.com', domain: 'oops' });

user.domain; // 'oops'

// Setters are order dependent. If `domain` is the first key,

// the setter will actually work.

user = new User({ domain: 'oops', email: 'test@gmail.com' });

user.domain; // 'gmail.com'

This means using setters for computed properties is unpredictable. Another

common issue is that setters also run on updateOne() and updateMany()

operations. If your setter modifies other properties in the document, you

need to be careful to ensure it supports both queries and documents.

Queries have a set() function to make this task easier.

const userSchema = Schema({ email: String, domain: String });

userSchema.path('email').set(function setter(v) {

const domain = v.slice(v.indexOf('@') + 1);

// Queries and documents both have a `set()` function

this.set({ domain });

return v;

});

const User = mongoose.model('User', userSchema);

let doc = await User.create({ email: 'test@gmail.com' });

doc.domain; // 'gmail.com'

// The setter will also run on `updateOne()`

const { _id } = doc;

const $set = { email: 'test@test.com' };

await User.updateOne({ _id }, { $set });

doc = await User.findOne({ _id });

doc.domain; // 'test.com'

You can handle computed properties with getters and setters, but virtuals are typically the better choice. In Mongoose, a virtual is a property that is not stored in MongoDB. Virtuals can have getters and setters just like normal properties. Virtuals have some neat properties that make them ideal for expressing the notion of a property that is a function of other properties.

Consider the previous example of a domain property that stores everything

after the '@' in the user's email. Instead of a custom setter that updates

the domain property every time the email property is modified, you can

create a virtual property domain. The virtual property domain will have a

getter that recomputes the user's email domain every time the property is

accessed.

const userSchema = Schema({ email: String });

userSchema.virtual('domain').get(function() {

return this.email.slice(this.email.indexOf('@') + 1);

});

const User = mongoose.model('User', userSchema);

let doc = await User.create({ email: 'test@gmail.com' });

doc.domain; // 'gmail.com'

// Mongoose ignores setting virtuals that don't have a setter

doc.set({ email: 'test@test.com', domain: 'foo' });

doc.domain; // 'test.com

Note that, even though the above code tries to set() the domain property

to an incorrect value, domain is still correct. Setting a virtual property

without a setter is a no-op. This virtual-based userSchema can safely accept

untrusted data without risk of corrupting domain.

The downside of virtuals is that, since they are not stored in MongoDB, you

cannot query documents by virtual properties. If you try to query by

a virtual property like domain, you won't get any results. That's because

MongoDB doesn't know about domain, domain is a property that Mongoose

computes in Node.js.

await User.create({ email: 'test@gmail.com' });

// `doc` will be null, because the document in the database

// does **not** have a `domain` property

const doc = await User.findOne({ domain: 'gmail.com' });

JSON.stringify()By default, Mongoose does not include virtuals when you convert a document to

JSON. For example, if you pass a document to the Express web framework's

res.json() function, virtuals will not be included by default.

You need to set the toJSON schema option to { virtuals: true } to opt in to

including virtuals in your document's JSON representation.

// Opt in to Mongoose putting virtuals in `toJSON()` output

// for `JSON.stringify()`, and in `toObject()` output.

const opts = { toJSON: { virtuals: true } };

const userSchema = Schema({ email: String }, opts);

userSchema.virtual('domain').get(function() {

return this.email.slice(this.email.indexOf('@') + 1);

});

const User = mongoose.model('User', userSchema);

const doc = await User.create({ email: 'test@gmail.com' });

// { _id: ..., email: 'test@gmail.com', domain: 'gmail.com' }

doc.toJSON();

// {"_id":...,"email":"test@gmail.com","domain":"gmail.com"}

JSON.stringify(doc);

Under the hood, the toJSON schema option configures the Document#toJSON()

function. JavaScript's native JSON.stringify() function looks for toJSON()

functions, and replaces the object being stringified with the result of

toJSON(). In the below example, JSON.stringify() serializes the value of

prop as the number 42, even though prop is an object in JavaScript.

const myObject = {

toJSON: function() {

// Will print once when you call `JSON.stringify()`.

console.log('Called!');

return 42;

}

};

// `{"prop":42}`. That is because `JSON.stringify()` uses the result

// of the `toJSON()` function.

JSON.stringify({ prop: myObject });

You can also configure the toJSON schema option globally:

mongoose.set('toJSON', { virtuals: true });

So far, this book has only used Model.findOne() and Model.updateOne() as

promises using the await keyword.

const doc = await MyModel.findOne();

When you call Model.findOne(), Mongoose does not return a promise. It

returns an instance of the Mongoose Query class, which can be used as a promise.

const query = Model.findOne();

query instanceof mongoose.Query; // true

// Each model has its own subclass of the `mongoose.Query` class

query instanceof Model.Query; // true

const doc = await query; // Executes the query

The reason why Mongoose has a special Query class rather than just returning

a promise from Model.findOne() is for chaining. You can construct a query

by chaining query helper methods like where() and equals().

// Equivalent to `await Model.findOne({ name: 'Jean-Luc Picard' })`

const doc = await Model.findOne().

where('name').equals('Jean-Luc Picard');

Each query has a op property, which contains the operation

Mongoose will send to the MongoDB server. For example, when you call

Model.findOne(), Mongoose sets op to 'findOne'.

const query = Model.findOne();

query.op; // 'findOne'

Below are the possible values for op. There's a corresponding method on the

Query class for each of the below values of op.

findfindOnefindOneAndDeletefindOneAndUpdatefindOneAndReplacedeleteOnedeleteManyreplaceOneupdateOneupdateManyYou can modify the op property before executing the query. Until you execute

the query, you can change the op by calling any of the above methods. For example,

some projects chain .find().updateOne() as shown below, because separating out

the query filter and the update operation may make your code more readable.

// Equivalent to `Model.updateOne({ name: 'Jean-Luc Picard' },

// { $set: { age: 59 } })`

const query = Model.

find({ name: 'Jean-Luc Picard' }).

updateOne({}, { $set: { age: 59 } });

Mongoose does not automatically execute a query, you need to explicitly

execute the query. There's two methods that let you execute a query: exec()

and then(). When you await on a query, the JavaScript runtime calls then()

under the hood.

// The `exec()` function executes the query and returns a promise.

const promise1 = Model.findOne().exec();

// The `then()` function calls `exec()` and returns a promise

const promise2 = Model.findOne().then(doc => doc);

// Equivalently, `await` calls `then()` under the hood

const doc = await Model.findOne();

When you require('mongoose'), you get an instance of the Mongoose class.

const mongoose = require('mongoose');

// Mongoose exports an instance of the `Mongoose` class

mongoose instanceof mongoose.Mongoose; // true

When someone refers to the "Mongoose global" or "Mongoose singleton", they're

referring to the object you get when you require('mongoose'). However, you

can also instantiate an entirely separate Mongoose instance with its own

options, models, and connections.

const { Mongoose } = require('mongoose');

const mongoose1 = new Mongoose();

const mongoose2 = new Mongoose();

mongoose1.set('toJSON', { virtuals: true });

mongoose1.get('toJSON'); // { virtuals: true }

mongoose2.get('toJSON'); // null

In particular, the Mongoose global has a default connection that is an

instance of the mongoose.Connection class. When you call mongoose.connect(),

that is equivalent to calling mongoose.connection.openUri().

const mongoose = require('mongoose');

const mongoose1 = new mongoose.Mongoose();

const mongoose2 = new mongoose.Mongoose();

mongoose1.connection instanceof mongoose.Connection; // true

mongoose2.connection instanceof mongoose.Connection; // true

mongoose1.connections.length; // 1

mongoose1.connections[0] === mongoose1.connection; // true

mongoose1.connection.readyState; // 0, 'disconnected'

mongoose1.connect('mongodb://localhost:27017/test',

{ useNewUrlParser: true });

mongoose1.connection.readyState; // 2, 'connecting'

When you call mongoose.createConnection(), you create a new connection

object that Mongoose tracks in the mongoose.connections property.

const mongoose = require('mongoose');

const mongoose1 = new mongoose.Mongoose();

const conn = mongoose1.createConnection('mongodb://localhost:27017/test',

{ useNewUrlParser: true });

mongoose1.connections.length; // 2

mongoose1.connections[1] === conn; // true

Why do you need multiple connections? For most apps, you only need one connection. Here's a couple examples when you would need to create multiple connections:

const mongoose = require('mongoose');

const conn1 = mongoose.createConnection('mongodb://localhost:27017/db1',

{ useNewUrlParser: true });

const conn2 = mongoose.createConnection('mongodb://localhost:27017/db2',

{ useNewUrlParser: true });

// Will store data in the 'db1' database's 'tests' collection

const Model1 = conn1.model('Test', mongoose.Schema({ name: String }));

// Will store data in the 'db2' database's 'tests' collection

const Model2 = conn2.model('Test', mongoose.Schema({ name: String }));

There are 5 core concepts in Mongoose: models, documents, schemas, connections, and queries. Most Mongoose apps will use all 5 of these concepts.

When you create a schema, there are two implicit classes: SchemaTypes and VirtualTypes. You may interact with these directly, but you can build a simple CRUD app without dealing with them.

Finally, when you require('mongoose'), you get back an instance of the Mongoose

class. An instance of the Mongoose class tracks a list of connections, and stores some global options. Advanced users may want to create multiple Mongoose instances,

but most apps will only use the singleton Mongoose instance you get when you

require('mongoose').

Queries and aggregations are how you fetch data from MongoDB into Mongoose. You can also use queries to update data in MongoDB without needing to fetch the documents.

Mongoose models have several static functions, like find() and updateOne(),

that return Mongoose Query objects.

const schema = Schema({ name: String, age: Number });

const Model = mongoose.model('Model', schema);

const query = Model.find({ age: { $lte: 30 } });

query instanceof mongoose.Query; // true

Mongoose queries provide a chainable API for creating and executing CRUD (create, ead, update, delete) operations. Mongoose queries let you build up a CRUD operation in Node.js, and then send the operation to the MongoDB server.

Mongoose queries are just objects in Node.js memory until you actually execute them. There are 3 ways to execute a query:

await on the queryQuery#then()Query#exec()Under the hood, all 3 ways are equivalent. The await keyword calls Query#then(),

and Mongoose's Query#then() calls Query#exec(), so (1) and (2) are

just syntactic sugar for (3).

Here are the 3 ways to execute a query in code form:

const query = Model.findOne();

// Execute the query 3 times, in 3 different ways

await query; // 1

query.then(res => {}); // 2

await query.exec(); // 3

Note that a query can be executed multiple times. The above example executes 3

separate findOne() queries.

The actual operation that Mongoose will execute is stored as a string in

the Query#op property. Below is a list of all valid query ops, grouped

by CRUD verb.

count: return the number of documents that match filter (deprecated)countDocuments: return the number of documents that match filterdistinct: return a list of the distinct values of a given fieldestimatedDocumentCount: return the number of documents in the collectionfind: return a list of documents that match filterfindOne: return the first document that matches filter, or nullfindOneAndReplace: same as replaceOne() + returns the replaced documentfindOneAndUpdate: same as updateOne() + returns the replaced documentreplaceOne: replace the first document that matches filter with replacementupdate: update the first document that matches filter (deprecated)updateMany: update all documents that match filterupdateOne: update the first document that matches filterdeleteOne: delete the first document that matches filterdeleteMany: delete all documents that match filterfindOneAndDelete: same as deleteOne() + returns the deleted documentfindOneAndRemove: same as remove() + returns the deleted document (deprecated)remove: delete all documents that match filter (deprecated)You can modify the query op before executing.

Many developers use .find().updateOne() in order to separate out the query filter

from the update object for readability.

// Chaining makes it easier to visually break up complex updates

await Model.

find({ name: 'Will Riker' }).

updateOne({}, { rank: 'Commander' });

// Equivalent, without using chaining syntax

await Model.updateOne({ name: 'Will Riker' }, { rank: 'Commander' });

Note that .find().updateOne() is not the same thing as .findOneAndUpdate().

findOneAndUpdate() is a distinct operation on the MongoDB server.

Chaining .find().updateOne() ends up as an updateOne().

There are 17 distinct query operations in Mongoose. 4 are deprecated: count(),

update(), findOneAndRemove(), and remove(). The remaining 13 can be

broken up into 5 classes: reads, updates, deletes, find and modify ops, and other.

find(), findOne(), and countDocuments() all take in a filter parameter and

find document(s) that match the filter.

find() returns a list of all documents that match filter. If none are found, find() returns an empty array.findOne() returns the first document that matches filter. If none are found, findOne() returns null.countDocuments() returns the number of documents that match filter. If none are found, countDocuments() returns 0.Below is an example of using find(), findOne(), and countDocuments() with

the same filter.

await Model.insertMany([

{ name: 'Jean-Luc Picard', age: 59 },

{ name: 'Will Riker', age: 29 },

{ name: 'Deanna Troi', age: 29 }

]);

const filter = { age: { $lt: 30 } };

let res = await Model.find(filter);

res[0].name; // 'Will Riker'

res[1].name; // 'Deanna Troi'

res = await Model.findOne(filter);

res.name; // 'Will Riker'

res = await Model.countDocuments(filter);

res; // 2

deleteOne(), deleteMany() , and remove() all take a filter parameter and

delete document(s) from the collection that match the given filter.

deleteOne() deletes the first document that matches filterdeleteMany() deletes all documents that match filterremove() deletes all documents that match filter. remove() is deprecated, you should use deleteOne() or deleteMany() instead.Below is an example of using deleteOne(), deleteMany(), and remove() with

the same filter.

await Model.insertMany([

{ name: 'Jean-Luc Picard', age: 59 },

{ name: 'Will Riker', age: 29 },

{ name: 'Deanna Troi', age: 29 }

]);

const filter = { age: { $lt: 30 } };

let res = await Model.deleteOne(filter);

res.deletedCount; // 1

await Model.create({ name: 'Will Riker', age: 29 });

res = await Model.deleteMany(filter);

res.deletedCount; // 2

await Model.insertMany([

{ name: 'Will Riker', age: 29 },

{ name: 'Deanna Troi', age: 29 }

]);

// `remove()` deletes all docs that match `filter` by default

res = await Model.remove(filter);

res.deletedCount; // 2

The return value of await Model.deleteOne() is not the deleted document or

documents. The return value is a result object that has a deletedCount property,

which tells you the number of documents MongoDB deleted. To get the deleted document,

you'll need to use findOneAndDelete().

updateOne(), updateMany(), replaceOne(), and update() all take in a filter

parameter and an update parameter, and modify documents that match filter based on

update.

updateOne() updates the first document that matches filterupdateMany() updates all documents that match filterreplaceOne() replaces the first document that matches filter with the update parameterupdate() updates the first document that matches filter. update() is deprecated, use updateOne() instead.Below is an example of using updateOne(), updateMany(), and update() with the

same filter and update parameters:

const schema = Schema({ name: String, age: Number, rank: String });

const Model = mongoose.model('Model', schema);

await Model.insertMany([

{ name: 'Jean-Luc Picard', age: 59 },

{ name: 'Will Riker', age: 29 },

{ name: 'Deanna Troi', age: 29 }

]);

const filter = { age: { $lt: 30 } };

const update = { rank: 'Commander' };

// `updateOne()`

let res = await Model.updateOne(filter, update);

res.nModified; // 1

let docs = await Model.find(filter);

docs[0].rank; // 'Commander'

docs[1].rank; // undefined

// `updateMany()`

res = await Model.updateMany(filter, update);

res.nModified; // 2

docs = await Model.find(filter);

docs[0].rank; // 'Commander'

docs[1].rank; // 'Commander'

// `update()` behaves like `updateOne()` by default

res = await Model.update(filter, update);

res.nModified; // 1

The replaceOne() function is slightly different from updateOne()

because it replaces the matched document, meaning that it deletes all keys that

aren't in the update other than _id. In the previous example, updateOne() added

a 'rank' key to the document, but didn't change the other keys in the document.

On the other hand, replaceOne() deletes any keys that aren't in the update.

const filter = { age: { $lt: 30 } };

const replacement = { name: 'Will Riker', rank: 'Commander' };

// Sets `rank`, unsets `age`

let res = await Model.replaceOne(filter, replacement);

res.nModified; // 1

let docs = await Model.find({ name: 'Will Riker' });

!!docs[0]._id; // true

docs[0].name; // 'Will Riker'

docs[0].rank; // 'Commander'

docs[0].age; // undefined

Like deletes, updates do not return the updated document or documents. They

return a result object with an nModified property that contains the number of

documents the MongoDB server updated. To get the updated document, you should

use findOneAndUpdate().

findOneAndUpdate(), findOneAndDelete(), findOneAndReplace(), and findOneAndRemove() are operations that update or remove a single document. Unlike updateOne(), deleteOne(), and replaceOne(), these functions return the

updated document rather than simply the number of updated documents.

findOneAndUpdate() works like updateOne(), but also returns the documentfindOneAndDelete() works like deleteOne(), but also returns the documentfindOneAndReplace() works like replaceOne(), but also returns the documentfindOneAndRemove() works like deleteOne(). It is deprecated in favor of findOneAndDelete().Remember that findOneAndUpdate() is different than calling findOne() followed

by updateOne(). findOneAndUpdate() is a single operation,

and it is atomic.

For example, if you call findOne() followed by an updateOne(),

another update may come in and change the document between when you called

findOne() and when you called updateOne(). With findOneAndUpdate(),

that cannot happen.

By default, findOneAndUpdate(), findOneAndDelete(), and findOneAndReplace()

return the document as it was before the MongoDB server applied the update.

const filter = { name: 'Will Riker' };

const update = { rank: 'Commander' };

// MongoDB will return the matched doc as it was **before**

// applying `update`

let doc = await Model.findOneAndUpdate(filter, update);

doc.name; // 'Will Riker'

doc.rank; // undefined

const replacement = { name: 'Will Riker', rank: 'Commander' };

doc = await Model.findOneAndReplace(filter, replacement);

// `doc` still has an `age` key, because `findOneAndReplace()`

// returns the document as it was before it was replaced.

doc.rank; // 'Commander'

doc.age; // 29

// Delete the doc and return the doc as it was before the

// MongoDB server deleted it.

doc = await Model.findOneAndDelete(filter);

doc.name; // 'Will Riker'

doc.rank; // 'Commander'

doc.age; // undefined

To return the document as it is after the MongoDB server applied the update,

use the new option.

const filter = { name: 'Will Riker' };

const update = { rank: 'Commander' };

const options = { new: true };

// MongoDB will return the matched doc **after** the update

// was applied if you set the `new` option

let doc = await Model.findOneAndUpdate(filter, update, options);

doc.name; // 'Will Riker'

doc.rank; // 'Commander'

const replacement = { name: 'Will Riker', rank: 'Commander' };

doc = await Model.findOneAndReplace(filter, replacement, options);

doc.rank; // 'Commander'

doc.age; // void 0

The distinct() and estimatedDocumentCount() operations don't quite fit in any

of the other classes. Their semantics are sufficiently different that they belong

in their own class.

distinct(key, filter) returns an array of distinct values of key among documents that match filter.// Return an array containing the distinct values of the `age`

// property. The values in the array can be in any order.

let values = await Model.distinct('age');

values.sort(); // [29, 59]

const filter = { age: { $lte: 29 } };

values = await Model.distinct('name', filter);

values.sort(); // ['Deanna Troi', 'Will Riker']

estimatedDocumentCount() returns the number of documents in the collection based on MongoDB's collection metadata. It doesn't take any parameters.// Unlike `countDocuments()`, `estimatedDocumentCount()` does **not**

// take a `filter` parameter. It only returns the number of documents

// in the collection.

const count = await Model.estimatedDocumentCount();

count; // 3

In most cases, calling estimatedDocumentCount() will give the same result as calling

countDocuments() with no parameters. estimatedDocumentCount() is generally faster

than countDocuments() because it doesn't actually go through and count the documents

in the collection. However, estimatedDocumentCount() may be incorrect for one of two reasons:

estimatedDocumentCount() is rarely useful when building apps because it doesn't

accept a filter parameter. You can use estimatedDocumentCount() if you want to

count the total number of documents in a massive collection, but countDocuments()

is usually fast enough.

MongoDB supports a wide variety of operators to build up sophisticated filter

parameters for operations like find() and updateOne().

In the previous section, several examples used the $lte query operator

(also known as a query selector) to filter

for Star Trek characters whose age was less than 30:

// `$lte` is an example of a query selector

const filter = { age: { $lte: 30 } };

const docs = await Model.find(filter);

If an object has multiple query selectors, MongoDB treats it as an "and".

For example, the below query selector will match documents whose age

is greater than 50 and less than 60.

const querySelectors = { $gt: 50, $lt: 60 };

const filter = { age: querySelectors };

The most commonly used query selectors fall into one of 4 classes:

$eq: Matches values that strictly equal the specified value$gt: Matches values that are greater than the specified value$gte: Matches values that are greather than or equal to the specified value$in: Matches values that are strictly equal to a value in the specified array$lt: Matches values that are less than a given value$lte: Matches values that are less than or equal to the specified value$ne: Matches values that are not strictly equal to the specified value$nin: Matches values that are not strictly equal to any of the specified values$regex: Matches values that are strings which match the specified regular expressionThe right hand side of a comparison query selector is the value or values to compare against.

// Matches if the `age` property is exactly equal to 42

let filter = { age: { $eq: 42 } };

// Matches if the `age` property is a number between 30 and 40

filter = { age: { $gte: 30, $lt: 40 } };

// Matches if `name` is 'Jean-Luc Picard' or 'Will Riker'

filter = { name: { $in: ['Jean-Luc Picard', 'Will Riker'] } };

// Matches if `name` is a string containing 'picard', ignoring case

filter = { name: { $regex: /picard/i } };

There are two 'element' query selectors. These query selectors help you filter documents based on the type of the value, like whether a value is a string or a number.

$exists: Matches either documents that have a certain property, or don't have the specified property, based on whether the given value is true or false$type: Matches documents where the property has the specified type.Here's how $exists works:

const schema = Schema({ name: String, age: Number, rank: String });

const Model = mongoose.model('Model', schema);

await Model.create({ name: 'Will Riker', age: 29 });

// Finds the doc, because there's an `age` property

let doc = await Model.findOne({ age: { $exists: true } });

// Does **not** find the doc, no `rank` property

doc = await Model.findOne({ rank: { $exists: true } });

// Finds the doc, because there's no `rank` property

doc = await Model.findOne({ rank: { $exists: false } });

// Finds the doc, because `$exists: true` matches `null` values.

// `$exists` is analagous to a JavaScript `in` or `hasOwnProperty()`

await Model.updateOne({}, { rank: null });

doc = await Model.findOne({ rank: { $exists: true } });

$exists is useful, but it also matches null values. The $type query

selector is helpful for cases where you want to match documents that are the

wrong type.

const schema = Schema({ name: String, age: Number, rank: String });

const Model = mongoose.model('Model', schema);

await Model.create({ name: 'Will Riker', age: 29 });

// Finds the doc, because `age` is a number

let doc = await Model.findOne({ age: { $type: 'number' } });

// Does **not** find the doc, because the doc doesn't

// have a `rank` property.

doc = await Model.findOne({ rank: { $type: 'string' } });

Below is a list of valid types for the $type query selector:

'double''string''object''array''binData''objectId''bool''null''regex''int''timestamp''long''decimal''number': Alias that matches 'double', 'int', 'long', and 'decimal'There is a small gotcha when using $type with Mongoose numbers: to save space,

the underlying MongoDB driver stores JavaScript numbers as 'int' where possible,

and 'double' otherwise. So if age is an integer, Mongoose will store it as an

'int', otherwise Mongoose will store it as a 'double'.

const schema = Schema({ name: String, age: Number, rank: String });

const Model = mongoose.model('Model', schema);

await Model.create({ name: 'Will Riker', age: 29 });

// Finds the doc. Mongoose stores `age` as an int

let doc = await Model.findOne({ age: { $type: 'int' } });

doc.age = 29.5;

await doc.save();

// Does **not** find the doc: `age` is no longer an int

doc = await Model.findOne({ age: { $type: 'int' } });

// Finds the doc, `age` is now a double

doc = await Model.findOne({ age: { $type: 'double' } });

// Finds the doc, 'number' matches both ints and doubles.

doc = await Model.findOne({ age: { $type: 'number' } });

You can also invert the $type query selector using the $not query selector.

$not: { $type: 'string' } will match any value that is not a string, including

documents that do not have the property.

const schema = Schema({ name: String, age: Number, rank: String });

const Model = mongoose.model('Model', schema);

await Model.create({ name: 'Will Riker', age: 29 });

// Finds the doc, because Mongoose stores `age` as an int

const querySelector = { $not: { $type: 'string' } };

let doc = await Model.findOne({ age: querySelector });

// Finds the doc, because `doc` doesn't have a `rank` property

doc = await Model.findOne({ rank: querySelector });

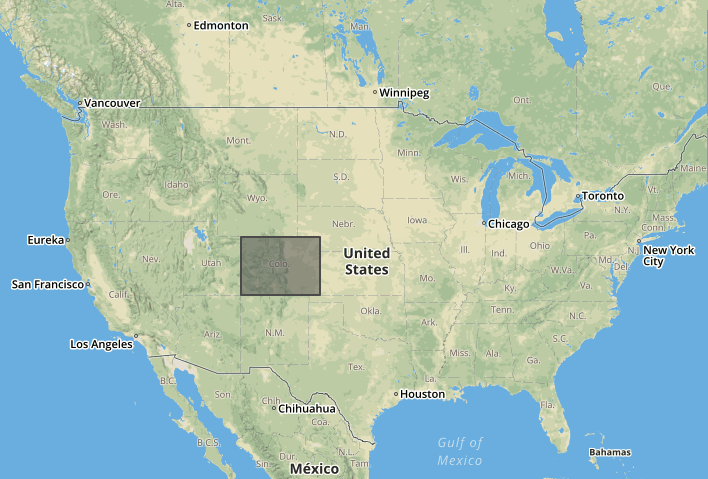

Geospatial operators are like comparison operators for geoJSON data. For

example, suppose you have a State model that contains all the US

states, with the state's boundaries stored as geoJSON in the location

property.

const State = mongoose.model('State', Schema({

name: String,

location: {

type: { type: String },

coordinates: [[[Number]]]

}

}));

// The US state of Colorado is roughly a geospherical rectangle

await State.create({

name: 'Colorado',

location: {

type: 'Polygon',

coordinates: [[

[-109, 41],

[-102, 41],

[-102, 37],

[-109, 37],

[-109, 41]

]]

}

});

The US state of Colorado is a convenient example because its boundaries are almost a geospatial rectangle, excluding the historical idiosyncracies of Colorado's borders. Here's what the above polygon looks like on a map:

MongoDB has 4 geospatial query selectors that help you query geoJSON data:

$geoIntersects$geoWithin$near$nearSphereThe $geoIntersects query selector matches if the document's value

intersects with the given value. For example, using $geoIntersects, you can

take a geoJSON point and see what State it is in. A point intersects with a

polygon if and only if the point is within the polygon.

// Approximate coordinates of the capital of Colorado

const denver = { type: 'Point', coordinates: [-104.9903, 39.7392] };

// Approximate coordinates of San Francisco

const sf = { type: 'Point', coordinates: [-122.5, 37.7] };

let doc = await State.findOne({

location: {

// You need to specify `$geometry` if you're using

// `$geoIntersects` with geoJSON.

$geoIntersects: { $geometry: denver }

}

});

doc.name; // 'Colorado'

doc = await State.findOne({

location: {

$geoIntersects: { $geometry: sf }

}

});

doc; // null

The $geoWithin query selector is slightly different than $geoIntersects: it matches

if the document's value is entirely contained within the given value. For example,

State.findOne() will not return a value with $geoWithin, because a polygon can't be entirely contained within a point.

However, if you have a City model where each city has a location that is a

geoJSON point, $geoWithin lets you find cities that are within the state of Colorado.

If the document value is a geoJSON point, $geoWithin and $geoIntersects are

equivalent. There's no way for a point to partially overlap with another geoJSON

feature.

const City = mongoose.model('City', Schema({

name: String,

location: {

type: { type: String },

coordinates: [Number]

}

}));

let location = { type: 'Point', coordinates: [-104.99, 39.739] };

await City.create({ name: 'Denver', location });

const colorado = await State.findOne();

const $geoWithin = { $geometry: colorado.location };

let doc = await City.findOne({ location: { $geoWithin } });

doc.name; // 'Denver'

// `$geoIntersects` also finds that Denver is in Colorado

const $geoIntersects = { $geometry: colorado.location };

doc = await City.findOne({ location: { $geoIntersects } });

Another neat feature of $geoWithin is that you can use it to find points that are

within a certain number of miles of a given point. For example, Denver is about 1000

miles away from San Francisco. The $centerSphere query operator lets you find

documents that are within 1000 miles of a given point:

const sfCoordinates = [-122.5, 37.7];

// $centerSphere distance is in radians, so convert miles to radians

const distance = 1000 / 3963.2;

const $geoWithin = { $centerSphere: [sfCoordinates, distance] };

let doc = await City.findOne({ location: { $geoWithin } });

// `doc` will be `null`

$geoWithin.$centerSphere[1] = distance / 2;

doc = await City.findOne({ location: { $geoWithin } });

The $geoWithin operator helps you find points that are within a certain distance

of a point, but those points may be in any order. The $near and $nearSphere

operators help you find points that are within a certain distance of a given point

and sort them by distance. The $near and $nearSphere operators require a

special '2dsphere' index.

The difference between $near and $nearSphere is that $nearSphere calculates

spherical distance (using the Haversine formula), but $near calculates

Cartesian distance (assuming the Earth is flat). In practice, you should use

$nearSphere for geospatial data.

const createCity = (name, coordinates) => ({

name,

location: { type: 'Point', coordinates }

});

await City.create([

createCity('Denver', [-104.9903, 39.7392]),

createCity('San Francisco', [-122.5, 37.7]),

createCity('Miami', [-80.13, 25.76])

]);

// Create a 2dsphere index, otherwise `$nearSphere` will error out

await City.collection.createIndex({ location: '2dsphere' });

// Find cities within 2000 miles of New York, sorted by distance

const $geometry = { type: 'Point', coordinates: [-74.26, 40.7] };

// `$nearSphere` distance is in meters, so convert miles to meters

const $maxDistance = 2000 * 1609.34;

const cities = await City.find({

location: { $nearSphere: { $geometry, $maxDistance } }

});

cities[0].name; // 'Miami'

cities[1].name; // 'Denver'

Array query selectors help you filter documents based on array properties.

$all: matches arrays that contain all of the values in the given array$size: matches arrays whose length is equal to the given number$elemMatch: matches arrays which contain a document that matches the given filterThe need for array query selectors is limited because MongoDB is smart enough to drill into arrays.

For example, suppose you have a BlogPost model with an array of embedded

comments. Each comment has a user property. You can find all blog posts

that a given user commented on simply by querying by comments.user.

let s = Schema({ comments: [{ user: String, text: String }] });

const BlogPost = mongoose.model('BlogPost', s);

await BlogPost.create([

{ comments: [{ user: 'jpicard', text: 'Make it so!' }] },

{ comments: [{ user: 'wriker', text: 'One, or both?' }] },

]);

const docs = await BlogPost.find({ 'comments.user': 'jpicard' });

docs.length; // 1

However, what if you want to find all blog posts that both 'wriker' and

'jpicard' commented on? That's where the $all query selector comes in.

The $all query selector matches arrays that contain all elements in the

given array.

await BlogPost.create([

{ comments: [{ user: 'jpicard', text: 'Make it so!' }] },

{ comments: [{ user: 'wriker', text: 'One, or both?' }] },

{ comments: [{ user: 'wriker' }, { user: 'jpicard' }] }

]);

// Find all blog posts that both 'wriker' and 'jpicard' commented on.

const $all = ['wriker', 'jpicard'];

let docs = await BlogPost.find({ 'comments.user': { $all } });

docs.length; // 1

// Find all blog posts that 'jpicard' commented on.

docs = await BlogPost.find({ 'comments.user': 'jpicard' });

docs.length; // 2

The $size operator lets you filter documents based on array length. For example,

here's how you find all blog posts that have 2 comments:

// Find all blog posts that have exactly 2 comments

const comments = { $size: 2 };

let docs = await BlogPost.find({ comments });

docs.length; // 1

The $elemMatch query selector more subtle. For example, suppose you wanted

to find all blog posts where the user 'jpicard' commented 'Make it so!'.

Naively, you might try a query on { 'comments.user', 'comments.comment' }

as shown below.

await BlogPost.create([

{ comments: [{ user: 'jpicard', text: 'Make it so!' }] },

{ comments: [{ user: 'wriker', text: 'One, or both?' }] },

{

comments: [

{ user: 'wriker', text: 'Make it so!' },

{ user: 'jpicard', text: 'That\'s my line!' }

]

}

]);

// Finds 2 documents, because this query finds blog posts where

// 'jpicard' commented, and where someone commented 'Make it so!'.

let docs = await BlogPost.find({

'comments.user': 'jpicard',

'comments.text': 'Make it so!'

});

docs.length; // 2

Unfortunately, that query doesn't work. The naive approach finds documents

where 'comments.user' is equal to 'jpicard' and 'comments.comment' is equal

to 'Make it so!', but doesn't make sure that the same subdocument has both

the correct user and the correct comment. That's what $elemMatch is for:

// `$elemMatch` is like a nested filter for array elements.

const $elemMatch = { user: 'jpicard', comment: 'Make it so!' };

let docs = await BlogPost.find({ comments: { $elemMatch } });

docs.length; // 1

MongoDB also provides several update operators to help you build up sophisticated

update parameters to updateOne(), updateMany(), and findOneAndUpdate().

Each update has at least one update operator.

In MongoDB, update operators start with '$'. So far, this book hasn't

explicitly used any update operators. That's because, when you provide an

update parameter that doesn't have any update operators, Mongoose

wraps your update in the $set update operator.

const schema = Schema({ name: String, age: Number, rank: String });

const Character = mongoose.model('Character', schema);

await Character.create({ name: 'Will Riker', age: 29 });

const filter = { name: 'Will Riker' };

let update = { rank: 'Commander' };

const opts = { new: true };

let doc = await Character.findOneAndUpdate(filter, update, opts);

doc.rank; // 'Commander'

// By default, Mongoose wraps your update in `$set`, so the

// below update is equivalent to the previous update.

update = { $set: { rank: 'Captain' } };

doc = await Character.findOneAndUpdate(filter, update, opts);

doc.rank; // 'Captain'

The $set operator sets the value of the given field to the given value. If

you provide multiple fields, $set sets them all. The $set operator also

supports dotted paths within nested objects.

const Character = mongoose.model('Character', Schema({

name: { first: String, last: String },

age: Number,

rank: String

}));

const name = { first: 'Will', last: 'Riker' };

await Character.create({ name, age: 29, rank: 'Commander' });

// Update `name.first` without touching `name.last`

const $set = { 'name.first': 'Thomas', rank: 'Lieutenant' };

let doc = await Character.findOneAndUpdate({}, { $set }, { new: true });

doc.name.first; // 'Thomas'

doc.name.last; // 'Riker'

doc.rank; // 'Lieutenant'

The $set operator is an example of a field update operator.

Field update operators operate on fields of any type, as opposed to array update operators or numeric update operators. Below is a list of the most common field update operators:

$set: set the value of the given fields to the given values$unset: delete the given fields$setOnInsert: set the value of the given fields to the given values if a new document was inserted in an upsert. Ignored if MongoDB updated an existing document.$min: set the value of the given fields to the specified values, if the specified value is less than the current value of the field.$max: set the value of the given fields to the specified values, if the specified value is greater than the current value of the field.The $unset operator deletes all properties in the given object. For example,

you an use it to unset a character's age:

const schema = Schema({ name: String, age: Number, rank: String });

const Character = mongoose.model('Character', schema);

await Character.create({ name: 'Will Riker', age: 29 });

const filter = { name: 'Will Riker' };

// Delete the `age` property

const update = { $unset: { age: 1 } };

const opts = { new: true };

let doc = await Character.findOneAndUpdate(filter, update, opts);

doc.age; // undefined

The $setOnInsert operator behaves like a conditional $set. It only sets the

values if a new document was inserted because of the upsert option. If upsert

is false, or the upsert modified an existing document rather than inserting

a new one, $setOnInsert does nothing.

await Character.create({ name: 'Will Riker', age: 29 });

let filter = { name: 'Will Riker' };

// Set `rank` if inserting a new document

const update = { $setOnInsert: { rank: 'Captain' } };

// If `upsert` option isn't set, `$setOnInsert` does nothing

const opts = { new: true, upsert: true };

let doc = await Character.findOneAndUpdate(filter, update, opts);

doc.rank; // undefined

filter = { name: 'Jean-Luc Picard' };

doc = await Character.findOne(filter);

doc; // null, so upsert will insert a new document

doc = await Character.findOneAndUpdate(filter, update, opts);

doc.name; // 'Jean-Luc Picard'

doc.rank; // 'Captain'

$min and $max set values based on the current value of the field. They're

so named because if you use $min, the updated value will be the minimum of the

given value and the current value. Similarly, if you use $max, the updated value

will be the maximum of the given value and the current value.

await Character.create({ name: 'Will Riker', age: 29 });

const filter = { name: 'Will Riker' };

const update = { $min: { age: 30 } };

const opts = { new: true, upsert: true };

let doc = await Character.findOneAndUpdate(filter, update, opts);

doc.age; // 29

update.$min.age = 28;

doc = await Character.findOneAndUpdate(filter, update, opts);

doc.age; // 28

Numeric update operators can only be used with numeric values. Both the value in the database and the given value must be numbers.

$inc: increments the given fields by the given values. The given values may be positive (for incrementing) or negative (for decrementing)$mul: multiplies the given fields by the given values.// Increment `age` by 1 using `$inc`

const filter = { name: 'Will Riker' };

let update = { $inc: { age: 1 } };

const opts = { new: true };

let doc = await Character.findOneAndUpdate(filter, update, opts);

doc.age; // 30

// Decrement `age` by 1

update.$inc.age = -1;

doc = await Character.findOneAndUpdate(filter, update, opts);

doc.age; // 29

// Multiply `age` by 2

update = { $mul: { age: 2 } };

doc = await Character.findOneAndUpdate(filter, update, opts);

doc.age; // 58

Array update operators can only be used when the value in the database is an array. They let you add or remove elements from arrays.